Artificial intelligence (AI) is no longer a futuristic concept. It quietly shapes how we live, work, and make decisions. From detecting hate speech online to analyzing legal documents or scanning medical images, AI influences some of the most fundamental aspects of human life. Yet, the same algorithms that promise efficiency and fairness can also entrench discrimination, amplify misinformation, or erode privacy.

At its core, this debate is about human rights: the rights we all hold simply because we are human. As the United Nations defines them, these universal rights apply to everyone, regardless of nationality, gender, or belief. They include the rights to essentials such as food, health, and safety, as well as freedoms that give life meaning, such as education, work, and liberty.

When developed and used responsibly, AI can be a powerful ally in protecting these rights. It has already helped track human rights abuses in conflict zones, from satellite imagery used to quantify village destruction in Darfur to thermal imaging used to monitor ethnic violence in Myanmar. As UNESCO highlights, it can also make education more accessible for people with disabilities, and it can uncover patterns of corruption that might otherwise go unnoticed.

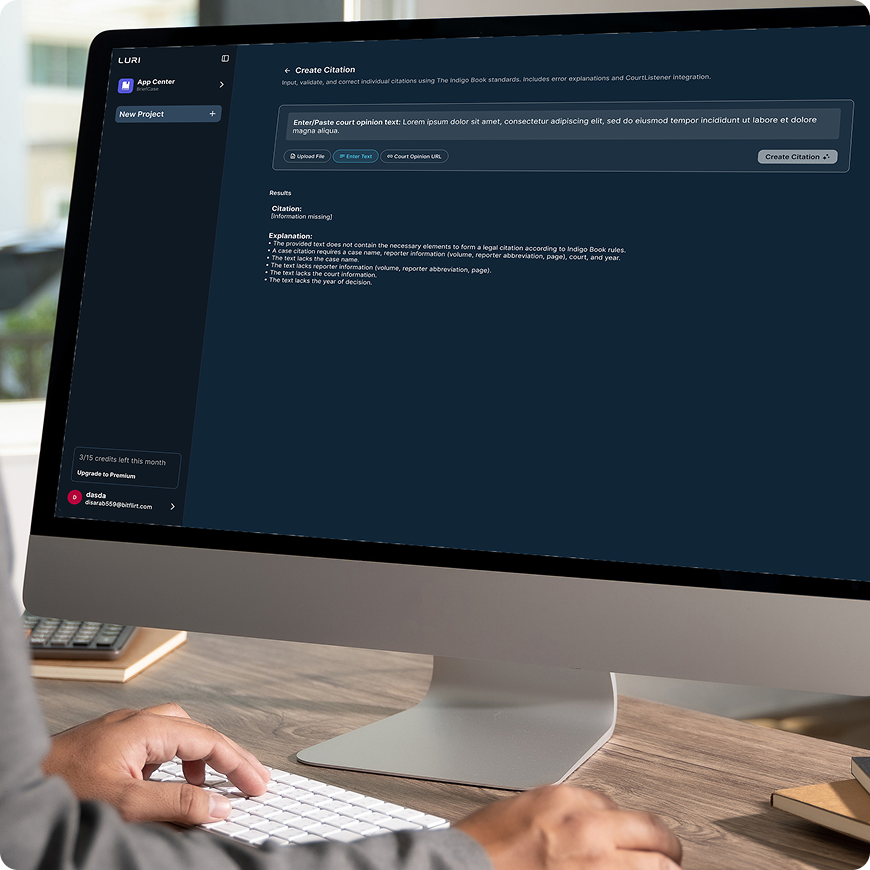

In the legal field, platforms like Luri® offer an example of responsible AI in practice. By assisting legal professionals, researchers, and individuals with document analysis, information retrieval, and case preparation, Luri® helps expand access to legal information and reduce barriers to justice. This illustrates how AI, when applied thoughtfully, can enhance human judgment, efficiency, and fairness, supporting decision-making rather than replacing it.

But the same technology that empowers can also endanger. Algorithms may unintentionally amplify existing inequalities, leading to unfair hiring decisions or biased access to opportunities. Deepfakes and misinformation campaigns can distort democratic debate and public trust. And as automation grows, empathy and human judgment risk being pushed aside.

A striking example comes from Brazil, where federal judge Jefferson Rodrigues used ChatGPT to draft a judicial ruling. The AI-generated text contained serious errors, including incorrect citations, misattributed decisions from the Superior Court of Justice, and missing legal context. The case sparked public concern about the unregulated use of generative AI in judicial processes. When technology is relied upon without proper oversight, it can jeopardize rights such as due process, equality before the law, and access to justice.

AI's dual nature, its ability both to empower and to endanger human rights, makes strong ethical and legal safeguards indispensable. Protecting privacy, ensuring fairness, and maintaining human oversight must remain central to every innovation. The goal is not to hold progress back but to guide it, so that technology continues to serve humanity and uphold the principles of justice, dignity, and equality.